This is the first part of a three-part article series. In this post, you will learn about the challenges of running a few services locally on developers’ laptops while the remaining services run remotely.

One common pain point faced by developers who work on complex Kubernetes-based applications is that their laptops burn up when they try to run all services locally.

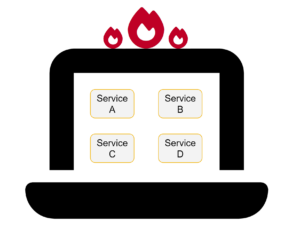

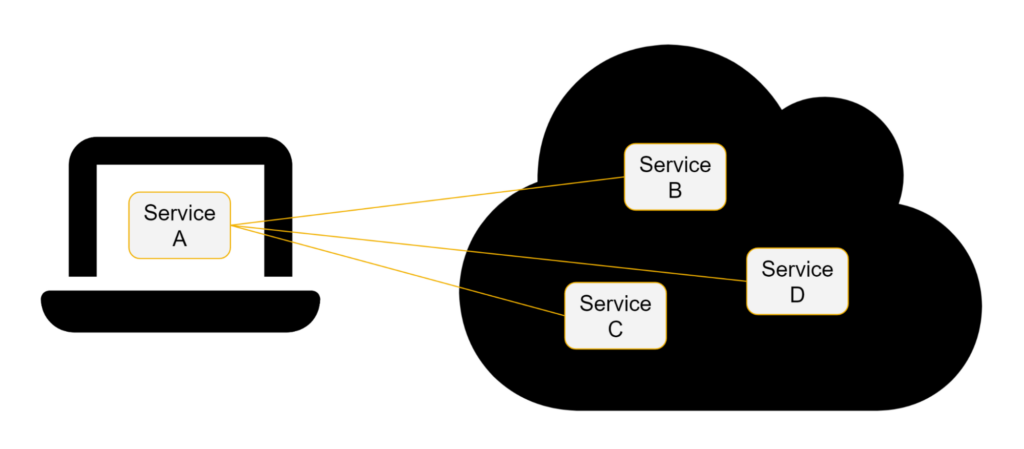

To deal with this, a setup has become common where developers only run one or two services locally, and connect to the other services on a remote cluster.

This has two advantages:

- Developers can build, run, and inspect the components they work on locally.

- Their laptops are not overloaded by running many services at once.

However, while it is a great idea, there are challenges to this setup.

Challenge 1: Connecting local to remote services

It is possible to configure services in Kubernetes to connect to each other across network boundaries, e.g. via port-forwarding. However, doing this manually can be a pain.

Fortunately, it has been made a lot easier by tools that were specifically developed to support this use case. Telepresence is a tool built specifically to connect Kubernetes pods across networks. It creates a virtual network interface that maps the cluster’s subnets to the host machine when it connects. Mirrord uses a different approach, but with the same outcome: it makes it manageable to connect individual services running on different machines.
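To give an idea of what the manual approach involves, here is a minimal sketch that builds one `kubectl port-forward` command per remote dependency. The service names, ports, and the `dev` namespace are made up for illustration; only the `kubectl port-forward` command itself is real.

```python
import shlex


def port_forward_command(service, local_port, remote_port, namespace="default"):
    """Build the kubectl command that forwards a local port to a cluster service."""
    return [
        "kubectl", "port-forward",
        f"svc/{service}",
        f"{local_port}:{remote_port}",
        "--namespace", namespace,
    ]


# Hypothetical remote services that the locally running component depends on:
# (service name, local port, port of the service in the cluster)
REMOTE_DEPENDENCIES = [
    ("orders", 8081, 80),
    ("statistics", 8082, 80),
]

for name, local, remote in REMOTE_DEPENDENCIES:
    # Each command has to run in its own process for as long as you develop.
    print(shlex.join(port_forward_command(name, local, remote, namespace="dev")))
```

Every additional dependency means another long-running forward and another local port to keep track of, which is the part that tools like Telepresence and mirrord take off your hands.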

Challenge 2: Cluster sharing

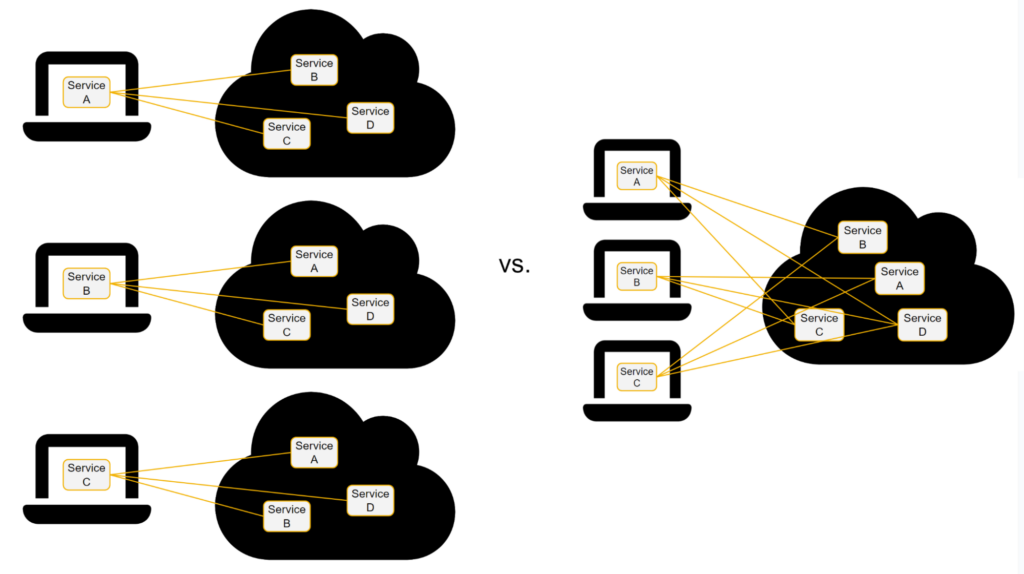

Getting the services to talk to each other is just one (complicated) part of the (even more complicated) entire puzzle. It makes it possible for one developer to connect their local service to one remote cluster that runs other services. That’s nice, but the general idea is to have one remote dev cluster that all devs can use and connect to. Otherwise, you’d have to run a dedicated dev cluster for each developer, which is expensive (and a pain to maintain).

Challenge 2a: Multi-tenant capabilities of remote services

If you want to have one dev cluster that many developers can share, your software needs to be able to support that. Specifically, each individual service needs to be multi-tenant capable, so that several other services can use it.

Multi-tenant capable services have awareness of which other services they are talking to, and which tenant (i.e. customer) these other services belong to. This must be implemented in a way that ensures that services do not leak data between tenants. For example, a statistics component must know which data belongs to which tenant in order to produce the right response about the right data for the right other service.
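As a rough sketch of what tenant awareness means for the statistics example, the following class keeps all data keyed by tenant and requires a tenant ID on every call. The class and method names are hypothetical, and an in-memory dictionary stands in for whatever storage a real service would use.

```python
from collections import defaultdict


class TenantAwareStatistics:
    """Toy statistics component that never mixes data of different tenants."""

    def __init__(self):
        # Values are stored per tenant, so a query can only see its own data.
        self._values = defaultdict(list)

    def record(self, tenant_id, value):
        self._values[tenant_id].append(value)

    def average(self, tenant_id):
        values = self._values.get(tenant_id)
        if not values:
            raise KeyError(f"no data for tenant {tenant_id!r}")
        return sum(values) / len(values)


stats = TenantAwareStatistics()
stats.record("tenant-a", 10)
stats.record("tenant-a", 20)
stats.record("tenant-b", 100)

print(stats.average("tenant-a"))  # 15.0, unaffected by tenant-b's data
print(stats.average("tenant-b"))  # 100.0
```

A service written without the `tenant_id` parameter would return one average across all callers, which is exactly the kind of leak that makes it impossible to share.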

Even though it is best practice to implement microservices in exactly this way, it is often not the case. Most commonly, the complexities of adding tenant separation outweigh the perceived immediate benefits, leading to some or all services not being multi-tenant capable.

Services that are not multi-tenant capable cannot be shared. This means that each developer would need a dedicated instance of each such un-shareable service. If only some services can’t be shared, it is conceivable to share those that can be and to deploy dedicated instances of the others for each developer.

This, however, is a pain to configure and manage and provides only part of the cost savings of sharing services, since a lot of services would still need to exist in several instances.

Getting to a point where a setup like this is sufficiently automated to be usable is worth the effort, though, because it enables iterative improvements: multi-tenant support can be added to individual services step by step, incrementally increasing the cost savings of sharing services.
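The arithmetic behind this is simple enough to sketch. The example below counts how many service instances a dev cluster needs when shareable services run once and all other services run once per developer. The four services and the team size of five are invented numbers.

```python
def required_instances(shareable_by_service, developer_count):
    """Count the service instances needed on a shared dev cluster.

    A shareable service runs once; any other service runs once per developer.
    """
    return sum(
        1 if shareable else developer_count
        for shareable in shareable_by_service.values()
    )


# Hypothetical application: four services, two of them multi-tenant capable.
services = {
    "auth": True,
    "statistics": True,
    "orders": False,
    "billing": False,
}
developers = 5

print(required_instances(services, developers))  # 2 shared + 2 * 5 dedicated = 12

# Adding multi-tenant support to one more service saves developers - 1 instances.
services["orders"] = True
print(required_instances(services, developers))  # 3 shared + 1 * 5 dedicated = 8
```

For comparison, a dedicated environment for each of the five developers would need 20 instances, and a fully shareable application would need 4.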

Side note: Multi-tenancy is beneficial not only for sharing services in development, but also for efficient scaling in production. The case is exactly the same in production as it is in development: any service that cannot be shared will exist as a dedicated service for each customer. They can be scaled up (i.e. several instances of that service can exist for one customer) but they cannot be scaled down (i.e. at least one instance of that service has to exist for each customer). This reduces the benefits of a microservices architecture significantly while also significantly increasing the complexity of managing it. If only some services can be shared and others can’t be shared, scaling must consider this, making it a much more complex undertaking.

Challenge 2b: Versioning & managing compatibility of services

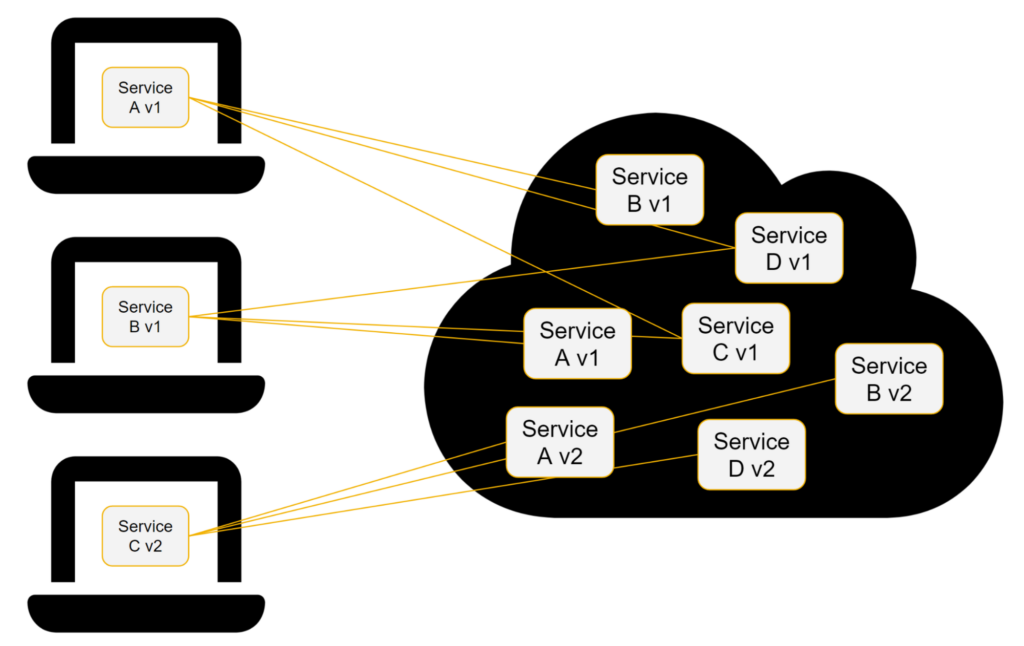

The next whopper is compatibility of services. If you have different teams working on different components, introducing a breaking change in one of the components would require either

- that both versions of that component are available on the dev cluster for other services to connect to. This requires version awareness, i.e. each service would need to know its own version and which versions of other services it is compatible with. Again, this is best practice, but in reality a lot of software isn’t built for this.

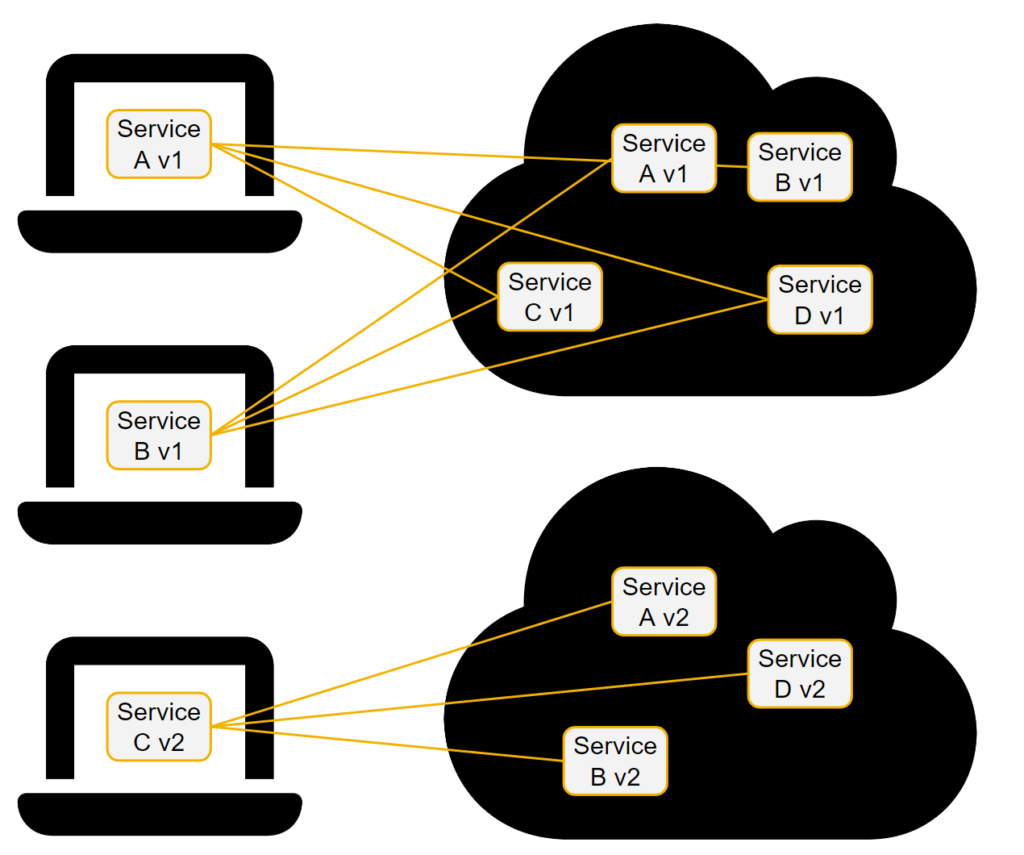

- or separate dev clusters for different versions, where each developer needs to know which cluster to develop against. This is easier to manage, but you’d need an additional cluster for each version that developers work on.
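To make the first option more concrete, here is a minimal sketch of version awareness: each release declares which versions of other services it can talk to, and a client only connects to matching releases on the cluster. All names and version numbers are hypothetical, and real systems usually use version ranges rather than exact matches.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceRelease:
    name: str
    version: str
    # Versions of other services this release can talk to, keyed by service name.
    compatible_with: dict = field(default_factory=dict)


def compatible_targets(client, deployed):
    """Return the deployed releases that the client declares it can connect to."""
    return [
        release
        for release in deployed
        if release.version in client.compatible_with.get(release.name, set())
    ]


# Hypothetical dev cluster running two versions of one component side by side.
deployed = [
    ServiceRelease("statistics", "1.4"),
    ServiceRelease("statistics", "2.0"),
]

old_frontend = ServiceRelease("frontend", "3.1", {"statistics": {"1.4"}})
new_frontend = ServiceRelease("frontend", "4.0", {"statistics": {"2.0"}})

for client in (old_frontend, new_frontend):
    targets = [f"{r.name} {r.version}" for r in compatible_targets(client, deployed)]
    print(client.name, client.version, "->", targets)
```

The sketch only works because every release carries this metadata, which is the part that a lot of existing software is missing.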

One way many companies choose to deal with compatibility issues is to avoid breaking changes at all costs and to prioritize backwards compatibility over most other aspects (such as technical excellence or user experience).

Unfortunately, this is often a way to move problems into other areas rather than solving them. Constraining the ability to introduce breaking changes leads to an accumulation of technical debt which makes every single additional change to the software more complex and costly.

This can become a vicious circle: backwards compatibility with older versions that do not have multi-tenant capabilities makes it impossible to introduce multi-tenant capabilities, since those require fundamental changes to the structure and content of API requests.

Factorial complexities

Managing version compatibility becomes real fun when several services introduce breaking changes, or you need to be able to support (i.e. bug fix and develop in) several different versions of your software.

Considering each service’s version compatibility and shareability, you already end up with a large number of possible constellations that you’d need to support, even when considering only those two factors.

In reality, there are many more factors that need to be considered, for example

- dependencies like libraries in specific versions,

- operating system compatibility,

- different possible constellations of services (e.g. different DB backends or other constellations that your software might support),

- other service interdependencies specific to your software.

With each dimension of variability, the possible number of constellations in which your software can exist grows very quickly. Fortunately, all factors that affect the compatibility of services with each other are much less critical than factors that affect whether one service can be used by several other services at all, i.e. shareability.

Each factor that makes one service incompatible with another means that that specific service has to exist in two (or several) different configurations. Each factor that prevents a service from being accessed by several other services means that it has to exist once for each possible constellation of other services.
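As a small illustration of how the number of constellations grows with each dimension, the sketch below multiplies the number of options per dimension. The dimensions and their options are invented; substitute the ones that apply to your software.

```python
from itertools import product
from math import prod

# Hypothetical dimensions of variability and their options.
dimensions = {
    "service_a_version": ["1.x", "2.x"],
    "service_b_version": ["1.x", "2.x"],
    "library_version": ["old", "new"],
    "operating_system": ["linux", "windows"],
    "db_backend": ["postgres", "mysql", "oracle"],
}


def count_constellations(dims):
    """Number of distinct constellations: the product of options per dimension."""
    return prod(len(options) for options in dims.values())


def enumerate_constellations(dims):
    """List every constellation as a dict of dimension name to chosen option."""
    names = list(dims)
    return [dict(zip(names, combo)) for combo in product(*dims.values())]


print(count_constellations(dimensions))  # 2 * 2 * 2 * 2 * 3 = 48

# Adding one more dimension with two options doubles the total.
dimensions["message_queue"] = ["kafka", "rabbitmq"]
print(count_constellations(dimensions))  # 96
```

Not every one of these constellations has to be supported in practice, but each one that is supported needs an environment in which it can be developed and tested.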

That’s the end of the first part of this series. In the second part, we dive deeper into this topic: I’ll show you an example of how quickly factorials can grow when services are not shareable, and what the real costs of running everything locally are.
